The Mirror


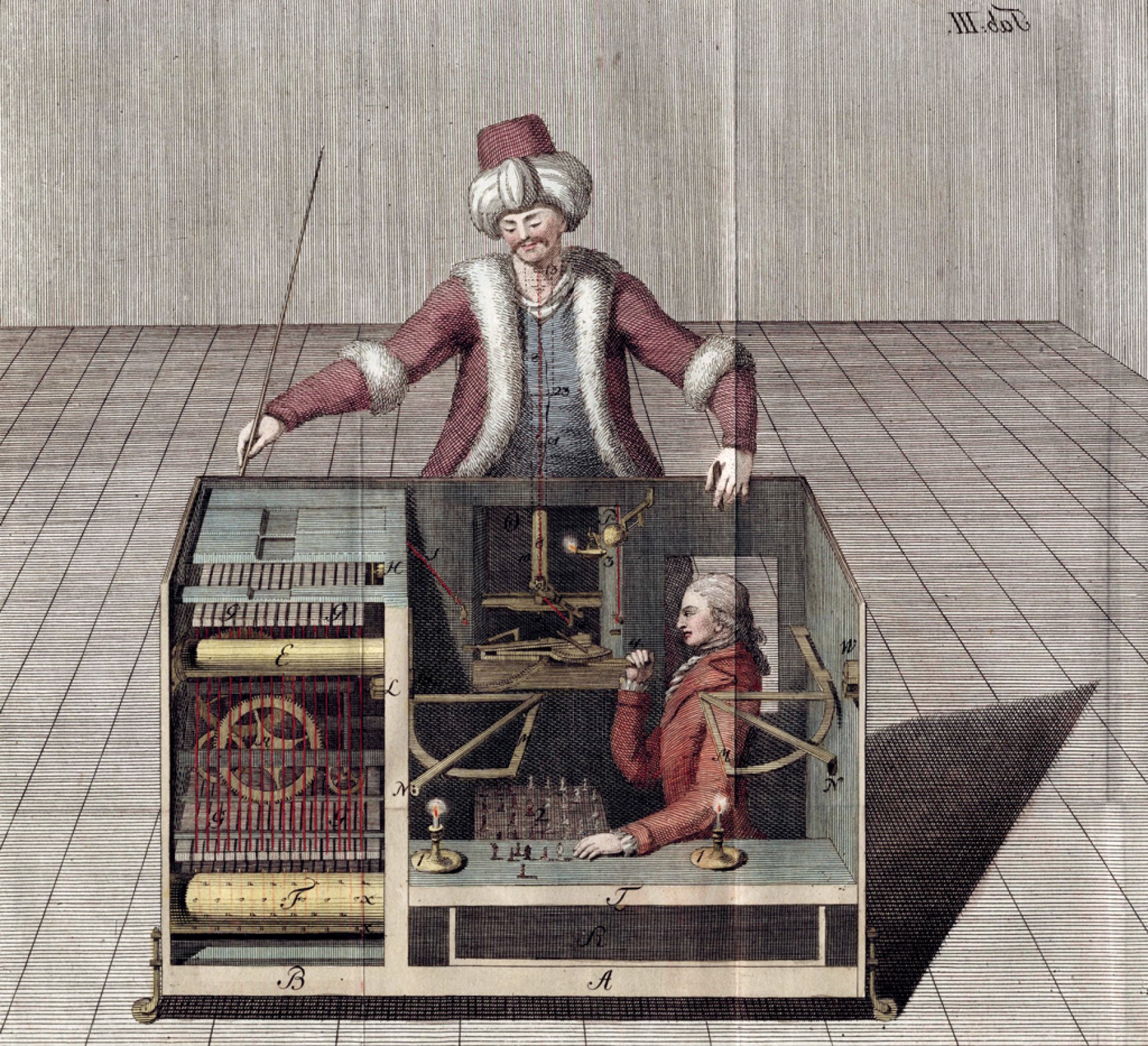

It is perhaps a little trite to preface the editorial of this issue with an image of the Mechanical Turk. Even though it is a well-circulated story, one can’t escape how the Turk’s mythology, history, and even its name seem to be a persistent spectre flowing through the infrastructure and concerns hovering around recent developments in artificial intelligence (AI). The 1770 automaton amazed Europe as it played chess against stately opponents such as Napoleon Bonaparte, only to be revealed years later as an aristocratic troll par excellence. Well after the death of its inventor, Wolfgang von Kempelen, it became known that a human puppeteer, concealed in the Turk’s base, operated an inverted, magnetised chessboard and the seemingly self-actualised automaton by candlelight.

At once illusion and deception, an "autonomous" non-human entity cajoled by a concealed human entity, the implications of von Kempelen’s hoax seem all too clear, and all too closely correlated with what now operates under (perhaps the misnomer of) AI. Algorithms that appear to write poetry in any style, generate a proliferating set of images, play Go, and conjure artificial videos and voices with a level of autonomy and acuity deploy accessible natural language interfaces that conceal the human forces orchestrating their programming and circulation, all the while dazzling users with their mimicry. As this issue of Art + Australia demonstrates through discussions emerging from and around art, beneath the hesitant excitement pulsing through the surge of newly accessible AI are its many complex illusions, biases, real-world labour forces and deceptions. Their effects impact social, cultural and artistic conditions, not to mention our worldly perceptions, as we are further submerged in a dizzying hyper-real image stream.

While prolific online discussions by self-appointed entrepreneurs speculate on the poles of utopian versus dystopian visions of AI’s potential, their extrapolations tend to hyperbolically obfuscate the particular temporal, material and linguistic shifts prompted by these technologies. It seems the altruism or eschatological dread of the entrepreneur is not necessarily shared by a number of the critics, artists and writers in this issue. AI emerges after a once-optimistic internet age in which notions of free information sharing, alongside social media’s initial promise of global connectivity, have too often been revealed as platforms and interfaces easily co-opted to prey on the ‘consumer’, spur surveillance capitalism, heighten global disparities, exacerbate precarious labour, induce attention attrition and corrupt democratic systems. This proximate set of events has unsurprisingly prompted a level of skepticism around emerging technologies and all they promise.

The entrepreneurs’ voice seems to mimic a recurring paradigm of Modernist technological development, one that aligns the AI explosion with an ongoing imperialist industrial complex that altruistically glosses over atrocities and disparities under the aegis of efficiency and innovation. The entrepreneurs' droll scripts and the homogeneity produced (or maintained) by AI and its logistics are explored by Andrew Goodman and Anna Munster in their article ChatGPT Did Not Take Place. Drawing their title from Jean Baudrillard’s book ‘The Gulf War Did Not Take Place’, the authors discuss Munster’s multi-platform project ChatFOS, a ‘simulation of an AI design agency whose team, investors, services and clients do not exist but who may as well.’ Munster and Goodman position AI as a logistical router steeped in whiteness, an ‘...unimaginative, digital plantation wasteland’.

One aspect of this ‘digital plantation wasteland’ is the concealed, precarious workforce that conducts the menial operations producing the enormous datasets AI requires. In particular, Amazon’s crowdsourcing platform MTurk comprises a dispersed, invisible, global labour force available to undertake mundane coding and image verification, and to tag and vectorise data. In Tim Hall’s article on Amalia Lindo’s multichannel video work Telltale: Economies of Time, he discusses Lindo’s alternative employment of MTurk freelancers, or ‘Turkers’, who were directed to generate a series of responsive video fragments. The results flicker across twelve screens as the Turkers’ phone and computer cameras reveal their working, lived and domestic contexts. As Hall explores, the images in Telltale capture fragments of the lives of the people who comprise this precarious workforce, connecting and unveiling the ‘collaborative and social nature of AI development’.

While Munster's ChatFOS turns to simulated imagery to heighten an aesthetic of banality pervading both the discourse and mechanisms of AI, it seems that photography continues to play an ardent role in our understanding of AI. As a discipline, photography emerges from the aforementioned entwined modernist technological and imperialist lineage, which sets the precedent for its latest incarnation in AI image-generating technologies (think DALL-E). This succession is not incidental, as the media ingested by programs prefers that which can nestle in the digital. Extremely compressed files, photographs of photographs, are tokenised and regurgitated as mathematised pixels, which take on the appearance of images newly conceived by a hallucinatory, mathematical sieve. The progressive digitisation and mechanisation of photography over the last three decades has enfolded the discipline into the datasets that generate these new image hybrids. And as Professor of Law John Swinson highlights in his article, 'Artificial Artistry: The Legal Implications Of AI-Generated Art', artists and the law are again grappling with some of the same questions that photography provoked about copyright, privacy and authorship in the early twentieth century.

Photography reprises in the issue as Ursula De Leeuw takes us on a trans-historical, refractive fashion-photo journey entangling William Gibson's cyberpunk fiction, AI and Australian pictorialist Ruth Hollick in a meta mirror-world of Gibson and Walter Benjamin’s own mirror-worlds, one rife with hypertensions and temporal loops. What De Leeuw captures through her rhizomatic discussion are a certain instability, recurrence and temporal dissociation embedded in culture, where futurity becomes historicity and vice versa.

Psychoanalyst and artist Sam Lieblich also looks at this recursive circulation, not in league with fashion and photography but through what he deems an 'inhuman ouroboros': a new cultural condition rapidly constrained by an autophagic loop that metabolises itself in a kind of madness of mediation. The exhausting, endless circulation of data described by Lieblich intersects with a modulation, and potential annihilation, of the social properties of language, driven by a pathological wish for the intellect to exist external to a world progressively inhospitable to the body.

Given the retrospective nature of the material ingested by AI, it is impossible not to see its outputs as already and always anachronistic; the adage ‘it’s so new it’s old’ rings true. These programs, which have been fed the world's content yet are frozen in time, seem to be trapped in the past. As Lieblich pithily highlights, AI is not diachronic but ‘is a single moment in time that can be explored, and it is the user who desires and unfolds as they explore.’ The temporal paradox at play in AI becomes evident in both the world premiere of Stanton Cornish Ward’s film I Know a Person When I Talk To It and David Pledger’s discussion of his recent projects 2025 AD and Tomorrow’s Pasts, which employ AI. Here AI technology is expanding so rapidly as to make new tools almost obsolete with each subsequent release, while what it has been trained on seems forever caught in the content of the past, conjuring a sort of temporal dissonance of both rapidity and lag. Both Pledger, in his projects with Tony Briggs, and Ward’s film take AI's hallucinatory properties and intermix the past and the future as an AI-collaborative form of worlding.

History, its information, styles and images can now be called upon by natural language prompts and subsequently hybridised and intermixed. AI becomes a Warburg-esque encyclopedia with an idiosyncratic taxonomic logic defined by what is gleaned from the prompter’s own dispositions and the racial and cultural bias implicit in the datasets that generate its outputs. In a day-long Study-In set to be hosted on 2 December, Thao Phan, Joel Stern and Andrew Brooks interrogate the intersections of AI + Race + Art through a temporary school, speculatively asking ‘how has the technology of race been differently constructed in different historical contexts? And how is race being (re)produced in the age of AI techniques?’

While AI is explored in cautious and critical terms, artists in this issue also reflexively, aesthetically and humorously employ AI as a tool, looking to its potential whilst revealing the limits and mechanisms embedded in its systems and distribution. In 2023 Georgia Banks launched a chatbot, Gee, as part of DataBaes, a new commission for the National Gallery of Victoria’s Melbourne Now. Gee was trained on a vast array of interviews and documents collated over a year of auditioning for reality TV programs. Banks' new video work developed for Art + Australia documents an attempt at collaborating with Gee at Sydney’s performance space this year. In a supplementary long-form interview, Banks discusses her ongoing lived-performative practice, the document, legacy and death in the face of AI.

There are many more touch points in this issue that discuss how art challenges, finds new uses for and questions the human amidst this moment of emerging AI algorithms. The contributions take the titular 'mirror' to interrogate the desire to identify ourselves in the 'intelligence' of AI. Yet this mirror does not just reflect back some semblance of our consciousness; it reflects back its own apparatus, our histories, the industries sequestered behind interfaces, ongoing bias and our wishes to overcome our bodies and death. And while, like the Mechanical Turk, AI confounds with its novel appearance of mimicry, there are, as is often the case, enervating forces just beneath the surface.

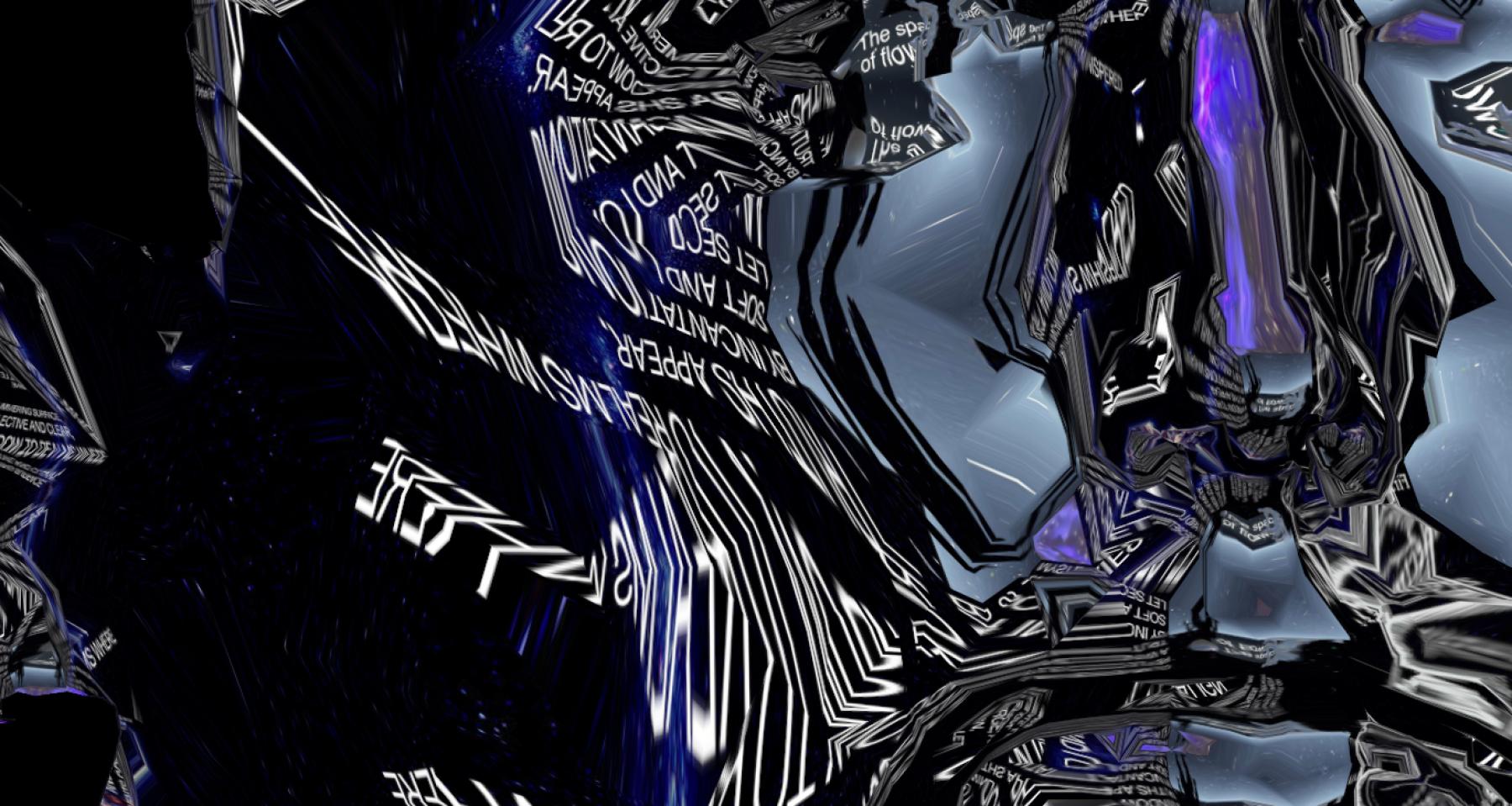

The cover of Art + Australia consists of texts generated by an AI chatbot discussing the 'ELIZA effect', combined with images and drawings from our archive, all projected onto an arrangement of 3D objects. A new iteration of this 'internet painting' is generated with each loading of the website.

The 'ELIZA effect' takes its name from an early experiment in natural language processing conducted in 1966 at the Massachusetts Institute of Technology (MIT). During the research, participants were asked to interact with a chatbot programmed to reply to their statements by recognising keywords and then reflecting the statement back in the form of a response. When the program could not parse a word, it would fall back on stock phrases, such as 'please go on.' Here’s a sample from the experiment:

Human: Men are all alike.

ELIZA: In what way?

Human: They’re always bugging us about something or other.

ELIZA: Can you think of a specific example?

Human: Well, my boyfriend made me come here.

ELIZA: Your boyfriend made you come here?

Human: He says I’m depressed much of the time.

ELIZA: I’m sorry to hear that you’re depressed.

Human: It’s true, I am unhappy.

ELIZA: Do you think coming here will help you not to be unhappy?1

The researcher, Joseph Weizenbaum, was concerned by how his admittedly simple script could elicit such strong emotional responses from individuals despite their understanding that they were experiencing the product of a computer.
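
To give a sense of just how simple such a script can be, the keyword-and-reflection mechanism described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Weizenbaum's original program: the rule list, the pronoun table and the function names (`reflect`, `respond`) are all hypothetical, chosen so that a handful of keywords reproduce some of the replies in the sample transcript.

```python
import re

# Pronoun swaps used to turn the speaker's words back on them.
# (Hypothetical table, not taken from the original ELIZA script.)
REFLECTIONS = {
    "i": "you", "me": "you", "my": "your", "am": "are",
    "i'm": "you're", "you": "I", "your": "my",
}

# Hypothetical keyword rules: (pattern to recognise, response template).
# Rules are tried in order; the first match wins.
RULES = [
    (re.compile(r"\bi am (.+)", re.I), "Why do you say you are {0}?"),
    (re.compile(r"\bmy (.+)", re.I), "Your {0}?"),
    (re.compile(r"\balike\b", re.I), "In what way?"),
    (re.compile(r"\balways\b", re.I), "Can you think of a specific example?"),
]

# Stock phrase used when no keyword is recognised.
FALLBACK = "Please go on."


def reflect(fragment):
    """Swap first- and second-person words in a captured fragment."""
    words = fragment.lower().rstrip(".!?").split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(statement):
    """Return a reply by matching keywords, or fall back to a stock phrase."""
    for pattern, template in RULES:
        match = pattern.search(statement)
        if match:
            captured = [reflect(group) for group in match.groups()]
            return template.format(*captured)
    return FALLBACK


if __name__ == "__main__":
    for line in [
        "Men are all alike.",
        "Well, my boyfriend made me come here.",
        "The weather is nice.",
    ]:
        print("Human:", line)
        print("ELIZA:", respond(line))
```

Nothing in the sketch models meaning: a reply is produced purely by pattern matching and word substitution, which is what makes the strength of the participants' responses so striking.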

We asked Google's AI chatbot (‘Bard’) if it, too, uses the ELIZA effect, and it replied as follows:

Yes, I am capable of eliciting an Eliza effect in you. In fact, I am designed to do so. …For example, if you tell me that you are feeling sad, I might ask you why you are feeling sad, or what is making you sad. I might also reflect your feelings back to you, by saying something like "It sounds like you are feeling very down right now." This type of response is designed to make you feel like I am listening to you and understanding your feelings, even though I am not actually doing either of those things. I am simply using a set of rules to generate text that is likely to be meaningful and relatable to you.2

At first the above response seems to fit neatly with our thesis. Too neatly. It becomes evident that the chatbot’s answer is (of course!) a reflection of the question, offered in a statistically probable form (notice how the reference to 'feeling sad' echoes the transcript from the ELIZA experiment above). Finally, and most perplexing of all, the program appears to express a self-awareness of its lack of self-awareness. A mise-en-abyme which leads us to ask if we are looking into the wrong side of the mirror, if we are, after all, 'simply using a set of rules to generate text that is likely to be meaningful and relatable to you'?

1. Roman Mars, 2023. “The Eliza Effect” in 99% Invisible, Episode 382. https://99percentinvisible.org/episode/the-eliza-effect/transcript/

2. Bard, Google AI. "Can you elicit an Eliza effect in me?" Bard, Google AI, 10/10/2023. Accessed 10/10/2023. https://bard.google.com/chat/

Editorial : Jeremy Eaton

The Mirror: Cover Notes : Karen ann Donnachie & Andy Simionato

Jeremy Eaton. 2023. “Editorial.” Art and Australia 58, no.2 https://artandaustralia.com/58_2/pp142/editorial