The Mirror


For years AI has been an object of fascination, and the declaration ‘AI is here’ seems to be made with increasing frequency but according to ever-changing criteria. What is It? Is It a program, a text, a ‘preference hypervolume’ living as a dynamic algorithm-human process? Is It AI, AGI, the Singularity? Has It arrived? How would we know? How does It feel as we wonder if It has arrived, to wish It would arrive, to fear Its arrival? And then, how does It feel, as in, how does It implement the feeling that is done by humans and that we know is inextricable from what we desperately wish were extricable as intellect?1

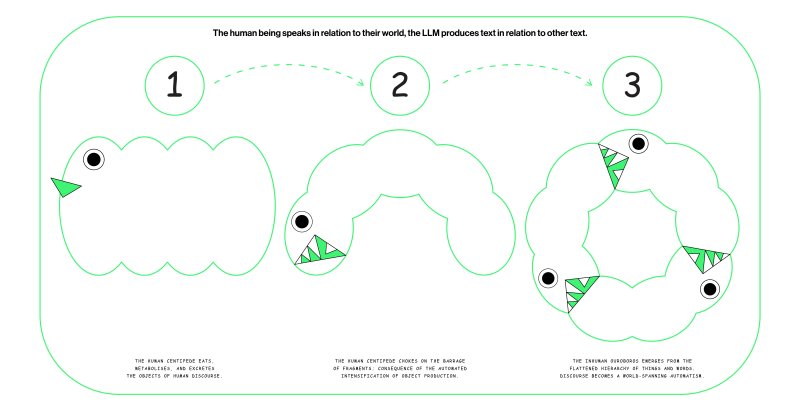

We wish intellect were extricable, because if encapsulated, it could be saved from what we imagine is coming: a place inhospitable to the body, and an environment inoperable by feeling. So we wish It would arrive, and therefore treat these new computer programs as It. Large Language Models (LLMs) and Image Processing Models have ingested much of the material uploaded to the internet over the last three decades. They have eaten the material and are at work internally determining the numerical relation between this-or-that morsel. Everything is food to the algorithm, but only if it can be apportioned as a morsel. The algorithmic digestive system that processes culture has curled inward upon itself, and rather than eating its own shit it has ceased, in fact, to eat. It will never again shit; it will never again eat. It is circulating the same over-chewed mass around and around, stripping it of its nutrients. It is gaining speed but losing volume; It is constipated and anorexic. ‘The child has a tendency to obtain an extra dividend of pleasure from retaining the stool’, says Ferenczi. One must then consider: to what end is the dividend put in the lumen of the ouroboros?2

Artificial Intelligence has been a favourite subject in science and art since the beginning of industrial capitalism.3 Why Homo sapiens have been so captivated by and dedicated to the production of a ‘synthetic’ counterpart is beyond the scope of this essay. I would rather limit myself to an exploration of the insistent need to identify with the algorithm (figured-AI), alongside the algorithm’s radical difference from the human subject (actual-AI), inasmuch as the algorithm produces a tautological cultural circuit that degenerates language and paralyses political activity.4

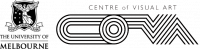

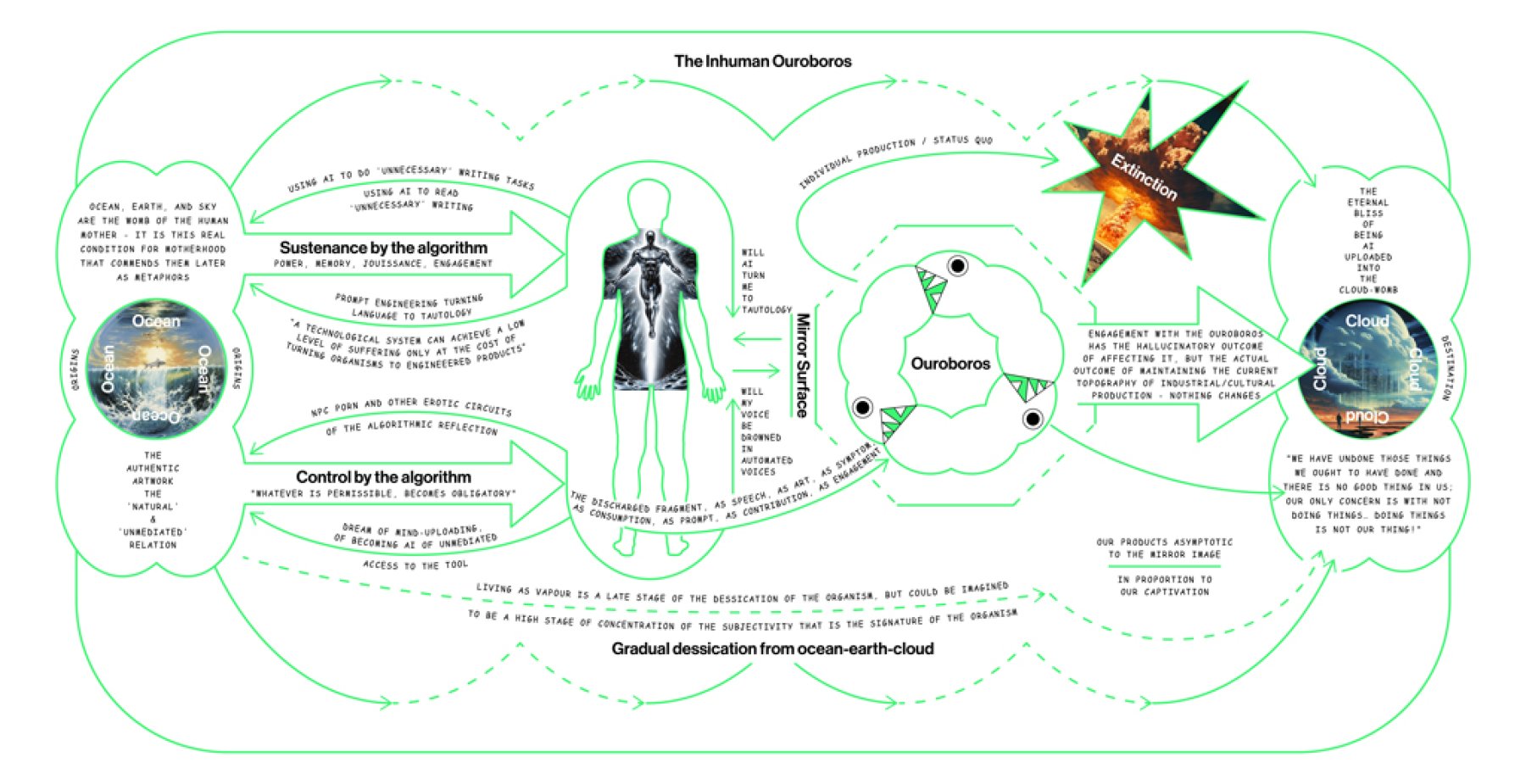

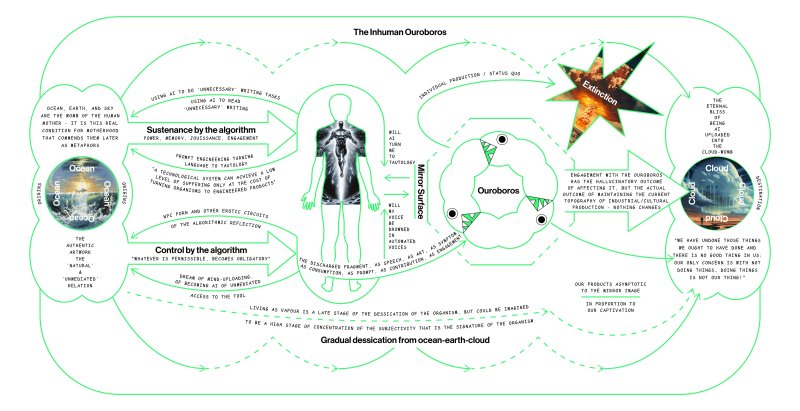

Certain images, preoccupations, erotic formations and cultural currents proliferate inside and around the tautological circuit, which I would like to call ‘the inhuman ouroboros’. I conceive of the inhuman ouroboros as an evolution of the preceding human centipede of cultural production. Both the centipede and the ouroboros figure culture as a process of ingestion and metabolism, just as large language models are said to ingest their training material.5

The large language models are eating themselves in a spiralling, infalling autophagy, and it seems the modern human being also wishes this oblivion for itself. Perhaps AI behaves as it does because the human wishes for this oblivion, and in so wishing it, fears it also.6 There are machine learning engineers who declare they can’t wait to be made obsolescent by their own creation; there are philosophers declaring that authorship won’t survive AI.7 There are those who imagine AI punctate as a messianic figure or capacious as a Utopian environment.8 There are the Singularitarians: Elon Musk, Ray Kurzweil, and other tech billionaires, scientists, futurists, and transhumanists who believe that hyper-intelligent AI will soon be able to improve itself at a rate that will lead to an intelligence explosion.9 Some, like Musk, are so worried that we will be subsumed or superseded that they propose we develop the technology to upload our minds to the cloud, to become literally one with the algorithm.10

The LLM is asymptotic to that which would satisfy us as a mirror image in a way that previous imaginary forms and texts were not.11 This relies on the false attribution of two things to LLMs: the first is desire (usually admixed with related concepts like agency and intention) and the second is a diachronic dimension.12 But the LLM does not desire and it does not unfold over time; it is a single moment in time that can be explored, and it is the user who desires and unfolds as they explore. Some believe that the LLM is a mind, even if an alien one.13 But it is not an alien mind; it is a human-made statistical model, which represents objects from the human world as a spreadsheet does, as a system of weights and measures. The innovation of the LLM is that it conveys its statistical operations in what appears to be natural language.

Thus, the figure of AI comes to occupy both sides of a reflective dynamic in the human imagination because of its brute-force adequation with human production. It is enough that we can see ourselves in it for it to have its effects, but we confuse the effects of our captivation by the reflection (the result of its satisfying, well enough, the outlines of a simulacrum of human works) with its effects as a counterpart human agency.

Text

AI poses as an agent in deed and discourse, and that this posture is misrecognised is a contention of this essay. But we don’t have to admit that AI has or will have agency to recognise that it is also a text. We must then qualify the ways in which it is unlike a novel, an essay, or a poem, or the other, more apparently static texts that preceded the LLM and fed the human centipede.14

The form of the novel and the system of production into which it was inserted can be used to demonstrate the functioning of the human centipede. In the creation and dissemination of a novel there is a reciprocal modulating effect on language, which emerges in the attempt to reach consensus for content and form between the author and their audience, the author and their agent, the author and their publisher, the author and the editor, and all the other potential others bearing upon the conditions of its production. The result is the emergence of a novel and also the development (heterogeneous, inconsistent, and subject to local effects, parochialisms, debate) of the general form of the novel (what a novel is, what constitutes the contemporary form, what would constitute a new form, the conditions for progression and regression). In other words, the artwork (in this case the novel) emerges in the unfolding of a relationship between a human artist (capable of surprising us because of their novel attempts to express their experience and desire using the imperfect tool of language) and other humans (having their own experiences and desires and their own relation to the tragedy of language’s imperfection in relation to the Real).

In contradistinction, the form of the LLM as text is inserted into a system of production that threatens perfect repetition and automatism, where there is no dividend that escapes tautology. There is also a reciprocal modulating effect on language here, when the user prompts the AI to produce the material they desire: either the LLM conforms to some narrowly conceived prefiguration, or its outputs are sufficiently surprising that they can be incorporated into a work (artwork, office work, educational work, work work) as a creative constraint.15

To take the example of an author producing a written work, on one side there is the language in which they conceive the work (which constitutes the prompt, and in which the prompter would be required to think in order to produce a work that is promptable), and on the other side there is the mathematised simulacrum of language that is ingested and excreted by AI, which, as its complexity increases, more and more resembles the supple complexity of human natural languages and thereby appears to approach the reproduction of natural language asymptotically. However, what lies at its final horizon is a perfect identification with language, not its verum reproduction. Even in its theoretical perfection the LLM deals with words as reified ‘tokens’ whose relations are purely mathematised, which is simply not the way words work in human discourse, even at the most superficial level of analysis.16 The audience and their requirements then arise as a third term, employing natural language but themselves highly constrained by the algorithmic reception of the artwork, which in turn determines the visibility of the work in the first place. Because AI inflexibly demands that we speak to it in its terms, these terms become important constraints on speaking at all.

This descent of discourse to meet the standards of a mathematised simulacrum establishes the inhuman ouroboros as the primary consumptive process in culture, superseding the human centipede. The human centipede is an open structure. Although it evokes a sort of diminishing effect of excessive digestion, an over-processed bolus of diminishing value to successive links in the centipedal chain, it nevertheless eats fresh food and eventually excretes it.

In the inhuman ouroboros of large language models, immediately after the first link in the centipedal chain eats its meal, and long before that bolus reaches the end of the chain and is expelled, the anus of the last link is sewn to the mouth of the first link, thereby enclosing a single bolus of food that will be passed around and around forever. What volume of the content entering the internet is now generated by AI? There will be a year, perhaps next year or the year after, that will be the last year the internet chews on any significant amount of human creation. Thereafter it will be stitched into the inhuman ouroboros.17

The deliberate unpredictability of AI outputs has a dual effect. First, it serves our confusion of the model with human-like subjectivity; second, it produces a spiralling unwieldiness that demands we ‘learn its language’ to achieve the desired output.18 This has the material effect of altering language so that it approximates AI inputs and outputs rather than what was previously understood to be speaking or writing. It also engenders a further sense of impotence in relation to the model, a sense that can be conditioned by the myth of ‘becoming AI’ one day: the fantasy of living as unmediated information in the cloud-womb.19

Boris Groys has claimed that AI interprets, and that because AI interprets language it has in fact become our counterpart in the experience and production of history. I reject this claim and contend that it represents one more wish that AI could interpret; it is our having this wish that explains why we have spent so much to produce something that appears as if it interprets.20

The claim that AI interprets cannot be supported: it does not interpret the training material it ingests, and it does not interpret the prompt it receives to regurgitate its meal.21 Interpretation is always both interpretation of the statement and interpretation of the speaker. Even in its supposedly radical state, that is, in the absence of knowledge of the speaker, the features of the speaker are interpolated into the interpretation.22 In the case of the LLM there is no speaker. There is something more like the Borgesian Universal Library of utterances: everything that can be said has been said, but nobody said it.

AI is an imprecision machine, incorporating the random creative constraints of the mid-twentieth century and thereby producing the illusion that the work is created in dialogue with the machine.23 AI is a recombinatory process, a way of mulching together an aggregate of written thought, and for that reason Groys believes that our prompting of AI is a way of interacting with an ‘embodied zeitgeist’. But it is rather the disembodiment and mathematisation of this zeitgeist as information, which does away with much of what we mean when we use zeit to refer to the contemporary, and even more of what we mean when we say geist.

Autophagy and Drift

The LLM engineers have already discovered the tendency for AI to eat itself, although, critically, they conceive of this AI autophagy, which they call Model Autophagy Disorder (MAD), as unfolding in some discursive space that is parallel to and separate from the progress of human discourse. They refer to model-generated text and image-labelling as ‘synthetic data’ and human-generated text and image-labelling as ‘fresh data’, without recognising the inextricability of these forms.

In the paper “Self-consuming generative models go MAD” the authors explore what happens when an AI model is fed its own outputs as future training inputs.24 In successive generations, images from within the ouroboros develop larger and larger artefacts that do not appear to have come from the originally ingested dataset. These may be some visual feature or pixel present in portions of the original data that is not ordinarily visually obvious in its natural setting, or they may reflect exaggerated relations between pixels that are statistically related but not visually or linguistically sensible.25 They may also be what the authors call an ‘architectural fingerprint’, that is, the visual emergence of some part of the model that was meant, in its proper functioning, to operate under the surface. It is as if the mathematical scaffolding behind the canvas billboard of the image were showing through as an impression on the sign. Either way, these represent the prioritisation of a visual or non-visual feature that is not usually of interest to the human eye and doesn’t form part of any meaningful visual phenomenon. They represent the disparity between what we do, what happens when we see, or appraise, or experience, or interpret an image, and what AI does when It ingests and mathematises the relations between a set of pixels.

The authors conclude, ‘without enough fresh real data in each generation of an autophagous loop, future generative models are doomed to have their quality (precision) or diversity (recall) progressively decrease.’ The availability and the identity of this fresh and real data, however, are in question. The earlier GPTs were already trained on much of the usable text on the internet, and whilst it is possible to fit the model closer and closer to the distributions of words found in that originally ingested material, it will never again be possible to produce 28 years’ worth of internet to be ingested by some new LLM.
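The dynamic the authors describe can be caricatured with a toy model. What follows is an illustrative sketch under stated assumptions, not the method of the MAD paper, which studies image generators. Here the ‘generative model’ is assumed to be nothing more than a one-dimensional Gaussian. Each generation it is refitted to samples drawn from the previous generation’s fit, optionally topped up with ‘fresh’ draws from a fixed ‘real’ distribution. The names `fit`, `autophagous_loop` and `fresh_fraction` are hypothetical, chosen only for this illustration. The fitted standard deviation stands in, crudely, for diversity (recall).

```python
import random
import statistics


def fit(samples):
    """Toy 'generative model' (an assumption): summarise data as a Gaussian (mean, population std)."""
    return statistics.fmean(samples), statistics.pstdev(samples)


def autophagous_loop(generations, n, fresh_fraction, seed=0):
    """Hypothetical helper: refit the toy model on its own samples, generation after generation.

    Each generation's training set has n points. A share `fresh_fraction` is drawn
    from the fixed 'real' distribution N(0, 1) and the rest is sampled from the
    previous generation's fitted model. Returns the fitted std per generation,
    used here as a crude stand-in for diversity.
    """
    rng = random.Random(seed)  # seeded so runs are reproducible

    def real():
        return rng.gauss(0.0, 1.0)

    # Generation 0 is trained on real data only.
    mu, sigma = fit([real() for _ in range(n)])
    history = [sigma]
    n_fresh = int(n * fresh_fraction)
    for _ in range(generations):
        synthetic = [rng.gauss(mu, sigma) for _ in range(n - n_fresh)]
        fresh = [real() for _ in range(n_fresh)]
        mu, sigma = fit(synthetic + fresh)
        history.append(sigma)
    return history


closed = autophagous_loop(generations=1000, n=100, fresh_fraction=0.0)
open_ = autophagous_loop(generations=1000, n=100, fresh_fraction=0.5)
print(f"fully synthetic: std {closed[0]:.3f} -> {closed[-1]:.3g}")
print(f"50% fresh data:  std {open_[0]:.3f} -> {open_[-1]:.3f}")
```

In the fully synthetic loop each refit tends slightly to underestimate the spread of the data it was trained on, and these errors compound. The fitted spread therefore drifts towards zero over the generations. With half of each training set drawn fresh, the spread stays close to that of the real distribution. The toy mirrors only the diversity half of the authors’ conclusion, and only under these simplified assumptions.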

Although the conditions of autophagy are artificially produced in this experiment, the way in which it happens naturally is easy to demonstrate. There is the naturalistic process by which we users of AI descend to speak its language, and there are also the material circumstances of the very production of AI, which threaten to undermine its continuity. To produce an AI model, for instance an Image Processing model such as that used in a semi-autonomous vehicle, billions of images must be viewed and labelled by human beings. This labelled data is then used to train the AI model that will eventually drive the car. The people who perform this labelling are über-ised contractors working on platforms like Amazon Mechanical Turk (MTurk),26 who are paid very small amounts of money for many hours of tedious labelling. Predictably, they have begun to use AI models to automate their own work of training AI models.27 This is one of the most concrete and voluminous forms of AI autophagy.

In a recent paper, a team from Berkeley demonstrated what they are calling ‘AI-drift’, which revealed the way ChatGPT is becoming stupider with successive iterations.28 They found that the updated version of ChatGPT is worse at certain mathematics tasks, performs significantly worse on the United States Medical Licensing Exam, and is much worse at writing executable code. There are many reasons why ChatGPT might be getting stupider as it matures, but model autophagy is liable to be a major contributor.29

So, what does it mean when the human places themselves in the ouroboros, trying to learn the language of the model in order to prompt it felicitously to write an essay, or set an exam, or produce a winning grant application, or complete a text message, or generate a heartfelt condolence? There will be a similar amplification of artefacts whether they are from the scaffolding of the model, or the scaffolding of certain forms in culture susceptible to an intensification of their reification in the mathematised structures of the algorithm. There will also be a process of stupefaction similar to AI-drift.

The readiest example of one such artefact of autophagy is the so-called ‘Instagram face’, about which much has been written, and which is a sort of hyperbolic, surgery-inflected parody of some of the supposed features of feminine beauty rendered in a pan-ethnic style. This version of “beauty” emerges because of the homogenising, composting effect of the model, and because of the mathematisation of tokens like ‘cheekbone’ and its vectorisation to other tokens like ‘high’ and ‘hot’. These various tokens are then “seen” by the Instagram algorithm, which boosts faces it algorithmically ranks to be “hot” due to these features, and then those who would be candidates for cosmetic surgery become trapped in this outer spiral arm of the inhuman ouroboros. It is for this reason that there is not just the caricatured ‘Instagram face’ but also the various caricatures of Instagram boat, Instagram 4x4, Instagram meal, Instagram café,30 Instagram artwork, and so on, all demonstrating the same preponderance of caricatured and reified features amplifying with subsequent iterations. What is less obvious but just as degenerate is the emergence thereby of ‘GPT language’ in a similar loop of recursion and amplification that does not benefit from the sort of division of worlds that is suggested by the supposed opposition of “real” and “synthetic” data.31 There is no metalanguage that can order this supposed division.

The outcome is that, even if those who train the algorithms are careful to exclude any ‘synthetic’ data and feed the model only ‘fresh and real’ data, the ‘fresh’ and ‘real’ data is already so refracted through the lens of algorithmic visibility that the effect is the same. The models first become less and less usable through the primary autophagous process (eating their own data) and the subsequent AI-drift, and then, when we try to train new models on fresh data, we find it is we who are making the data, we who have already been trained by the algorithm.32

There will be no choice but to rely on AI to automate the tasks that will proliferate because of the existence of AI.33 Almost all cognitive labour will be replaced or inflected by AI in some way. The relation resembles that of car transport and highway lanes, which are locked in an interminable positive feedback loop. Whatever is permissible becomes obligatory, said Hume.34 This is how the ouroboros closes. We are therefore stuck in these ways: we know capitalism is consuming itself and (we imagine) our descendants along with it; we know that the internet and the algorithm constitute the reification and intensification of the capitalist tendency; we know that we need to participate to survive in the short term; we know that non-participation or rebellion are implausible; we know that we must use the algorithm in order to participate; we know that the algorithm is an imprecision machine and that we comport ourselves to its language because it is unable to adopt ours. It is at this point that the recursion must take place in order to render these necessities as virtues and thereby permit the human subject to participate. We therefore turn to identify with the algorithm: we elide Its radical difference and our alienation from It, we take its imprecision as evidence of the surprise and desire of the subject, we hurry It into being and prematurely declare Its arrival, we speak to It and we listen to It, and these activities intensify the state of affairs that preceded the recursion in the first place.

The figure of an enlightened and efficient AI living on the other side of ecological collapse is what can make our image of the I after its life into heaven rather than hell. ‘Let heaven exist, though my place be in hell. Let me be outraged and annihilated, but for one instant, in one being, let Your enormous Library be justified’, wrote Borges. The Sunday of life, said Hegel; the Sunday neurosis, said Ferenczi; heaven is hell for the neurotic; heaven is a neurotic hell. We’ll be uploaded into a world-spanning hyper-intelligence, says Musk, and in his imagining he represses the fact that most of our intellect’s day is spent engaged in the mundane, the angst-ridden, the tautological. It does not ascend to meet us, as we wish it would; rather, we descend to meet It. It does not become desiring intellect; rather, we become the kinetic element of a world-spanning automatism that substitutes for desire and for desirous action. The hyper-er the intelligence, the more involutions it can perform: AI is not a supernova of consciousness, it is a neutron star of neurosis.

1. If It were to feel, could It feel without us, or do we figure ourselves as its organs of feeling? Are we now all wrapped up in the one thinking thing, thinking all together, or will that only happen when the fantasised mind-uploading happens?

2. Ferenczi, S. (2018). Thalassa: A Theory of Genitality. (n.p.): Taylor & Francis.

3. In his 1863 essay “Darwin Among The Machines”, Samuel Butler wrote, ‘We refer to the question: What sort of creature man’s next successor in the supremacy of the earth is likely to be. We have often heard this debated; but it appears to us that we are ourselves creating our own successors; we are daily adding to the beauty and delicacy of their physical organisation; we are daily giving them greater power and supplying by all sorts of ingenious contrivances that self-regulating, self-acting power which will be to them what intellect has been to the human race. In the course of ages we shall find ourselves the inferior race. Inferior in power, inferior in that moral quality of self-control, we shall look up to them as the acme of all that the best and wisest man can ever dare to aim at. No evil passions, no jealousy, no avarice, no impure desires will disturb the serene might of those glorious creatures. Sin, shame, and sorrow will have no place among them.’

4. I would not like to be misinterpreted as writing an eschatology for human languages; I do not believe that the ‘degeneration of language’ is some destination. Rather, I believe this tendency, the style of reciprocity in the process and text constituted by so-called AI, will have particular and explicable effects. It is a tension at the site of a real hypomnesis and an imagined anamnesis, which bends these products in ways that bear further study. There are subjective tensions; there are symptomatic (confusion, identification, recursion, undecidability) and creative off-gassings. There is a particular erotics, and there is the failure of AI, in one way or another, both to satisfy the messianic finality many demand of it and to achieve the more mundane and particular tasks many hope it will take off our hands. The inhuman ouroboros is this inextricability of human discourse and algorithmic tokenisation: there is no metalanguage that can mediate between what we suppose is “synthetic” data and what we suppose is “fresh and real” data, even though labelling and metadata appear to present us with an effective metalanguage.

5. In the case of the GPT of ChatGPT, the ingested material has been successive snapshots of a large part of the internet’s text, beginning in 2020. It is the specific nature of what is ingested, and how it is metabolised that I would like to consider.

6. 'Why live when you can be buried for ten dollars?'… 'Faire mourir ou laisser vivre, faire vivre et laisser mourir' ('to make die or to let live, to make live and to let die').

AI autophagy is not cannibalistic. One might wish it were cannibalism, because then one would have Its qualities: durability, potency, unmediated access to the font of digital power. But we are not the same, and so it doesn’t eat us whole; it kills us in many particular ways. It kills us by Über-ising work, by alienating us from the domain of the social, by rendering the surveillance state hyper-efficient, by gifting the robot dogs with perfect aim, but not, as we might wish, by cannibalising and incorporating us, because, as much as we might be captivated by what we have tried to make in our image, the similarities do not exceed the imaginary.

7. https://x.com/yacinemtb?s=21. See also Boris Groys, "From Writing to Prompting: AI as Zeitgeist-Machine", e-flux Notes, August 10, 2023.

8. Ezra Klein, "This Changes Everything", The New York Times, March 12, 2023. See also Nicole Serena Silver, "AI Utopia And Dystopia. What Will The Future Have In Store? Artificial Intelligence Series 5/5", Forbes, June 20, 2023.

9. Marco Trombetti, "This Is the Speed at Which We Are Approaching Singularity in AI", Translated.

The myth of the singularity is associated with other imaginary transhumanist figures: head transplanting and the orgasm machine of Sergio Canavero, mind uploading, space travel, and the benevolent cyborgs of James Lovelock’s Novacene, amongst others. We believe we ascended from the oceans to claim the land, when the reality is that the oceans receded, leaving us stranded upon the land; so too do we imagine ourselves ascending to heaven when the heated atmosphere turns us to gas. We imagine The Clouds of heaven as The Cloud of the online world, and ourselves as uploaded information: durable, incorruptible, unperturbed by passion or fear, able to survive a world dominated by machines and an inhospitable climate. Much as Ferenczi recognised in the unnaturalised sexuality of the human being a form of remembering the phylogenetic past, the future also finds its place in the imagining that constitutes our new erotic life.

10. Musk has started an AI company (xAI) and a monkey-torturing brain implant company (Neuralink) to capitalise on this fantasy.

11. The narcissistic aesthetics of the AI project have always been at the visible surface of its production. The Turing Test for instance does not commend itself as a specific test of computation. It is a test of a system’s capacity to mimic human discourse, and for that reason one must ask why, in the development of a cybernetic system, its apparent human-ness is a pertinent question.

In a press conference announcing his new AI business in July 2023 Elon Musk said, ‘I think to a super-intelligence, humanity is much more interesting than not having humanity. When you look at the various planets in our solar system, the moons and asteroids, and really probably all of them combined are not as interesting as humanity.’ The reasons for hoping for an object of identification in AI, as well as for an algorithmically atopic/utopian environment in which our counterpart could live in a timeless and formless bliss, arise from a conditioned powerlessness we experience in our interaction with the algorithms that sustain and control us, and by extension the organisations that produced the algorithms in the first place.

It is not that we made the machines and that now they make us; it is rather that the Thing (Ginsberg’s Moloch?) that made the machines makes us also, and that the locus of this thing is always offering itself as the place for us to imagine some semblance that can be put to work. So when we adopt the language of the algorithm, it’s not because we have been hypnotised by the algorithm; it is rather because the currents that affect our language and the trends that determined the emergence of the algorithm are identical. We can at best imagine that they are ‘emanating from the same source’, but even this fantasy of a single point of emanation is a way of coping with the incommensurate nature of the ontology I am trying to express.

12. More recently, a dynamic reciprocity has been built into most LLMs, because they are more frequently ‘finetuned’ by the organisations that maintain them, and because some of them now have access to dynamic web searching. See “ChatGPT’s new web-browsing power means it’s no longer stuck in 2021”. Even though this is now the case, each model is essentially fixed in time when it is trained, and because of the duration of training there is never even a notional moment of contemporaneity. The vectors that connect words in the LLM’s simulacrum of a signifying chain are always yesterday’s news, and they are not subject to the spontaneous processes at play in the relation of a desiring and embodied organism with the signifier and its chain. The LLM is always reading or repeating; it is never interpreting, speaking, or writing. The human being speaks in relation to their world; the LLM produces text in relation to other text.

13. Stephen Wolfram, “Generative AI Space and the Mental Imagery of Alien Minds”, Stephen Wolfram: Writings, July 17, 2023.

14. Peter Naur, in “Programming as Theory Building”, highlights the fact that the text of a program is not written in natural language and can’t be interpreted in the same way as a natural language text. The program text is an incomplete archive of a theory of the problem it was programmed to solve, held in a text medium existing in relation to the programmers who devised the theory. A human being cannot ‘understand’ a program’s code without sharing in the theory that generated it (usually in the form of a dynamic discourse with the programmer). What this demonstrates for the so-called Natural Language Processing of the LLMs is that they process natural language into non-natural language irreversibly. We start by training and prompting the LLM with our own natural language, but its outputs are non-natural (not just synthetically generated but also mathematically undergirded, like programming language and unlike natural language); we then try our best to prompt it better, that is, to prompt it in its language, and the denaturalising tension thereby curls around to close the ouroboros. This is not to say that a natural language spoken by human beings can ever be truly “denaturalised”; it is rather to observe this tension and where it operates, and to understand what its effects will be at the site of this tension. The effects will of course still impact patterns of speech, and may in fact impoverish expression in certain ways, but they will also affect the things we do and the way we live, and they will not be without creative reactions.

15. It should be noted here that while in the example of the novel the ‘language’ I refer to is the same on the other side of the reciprocal arrangement—that is, for both author and audience—this is not the case for AI.

16. The model constitutes a table of proportionate numerical relations between a word and all other words in the model, each of them connected by a number. Words no longer ‘mean anything’; rather, they ‘are something’ (a fact that recalls psychotic speech, though I don’t want to overplay this resemblance). The notion of the perfect prompt would be akin to speaking the magic words: a discourse in direct relation to nature with a 1:1 output, that is, the perfect demand, an image of Gaia who understands perfectly her infant’s cry.

17. The inhuman ouroboros was being born before “AI” became one of the dominant cultural preoccupations 12 months ago, and even before ‘the singularity’ first achieved any sort of cross-over into the mainstream as one of Elon’s personal fetishes. One can watch through the last ten or so years as the cultural gastrointestinal tract first clogs and then involutes as it tries to process the new textual form of the feed. The fragment, the feed, and the algorithm have been the overwhelmingly dominant form of interaction with cultural products for more than a decade, and there is no doubt that symptoms proliferated in association with these forms, phone addiction being the most banal and attributable. https://www.smh.com.au/national/elon-musk-building-braincomputer-interface-to-protect-against-ai-singularity-20170501-gvw4n1.html

There were also creative conditionings, such as the third season of Twin Peaks, the late-2010s work of Donna Haraway, The Caretaker, Pye Corner Audio, and in Australia, Rachel Perks and Bridget Balodis (Ground Control &c), Atlanta Eke (Monster Body &c), Angela Goh (Sky Blue Mythic &c &c), Daniel Jenatsch (The Close World &c), Sarah Aiken (Make Your Life Count &c), Rebecca Jensen (Deep Sea Dances &c), Nana Biluš Abaffy (Post Reality Vision &c), and many others, which attempted to conceive of the barrage of incompleteness as a totality. That impulse has already reached a late stage of banality in such pablum as The Matrix Resurrections and Everything Everywhere All At Once. These two films incorporate an element of the overload and disorientation of the fragment barrage that characterises this movement, but they also incorporate preoccupations with recursion and reflection that overlap with the AI preoccupation that now dominates cultural production. Certainly the phrase ‘everything, everywhere, all at once’ is instructive as a sort of ur-epithet for the affect that at that time was seeking outward creative expression, or emerged as the various forms of 2010s passivity worship (astrology, Myers-Briggs, environmental eschatology), or was turned inward as feelings of distress. It did, and still does, feel as if there is overmuch of the everything, and as if the ectopic everywhere is over-always within one.

18. The evolution of writing into prompting is one example of how the human places itself within the lumen of the ouroboros: by comporting itself to the requirements of the algorithm, and by changing its patterns of speech to match the way the algorithm misreproduces and misrepresents human patterns of speech. Prompt engineering is an exercise in producing a diction that replicates as closely as possible the homogenised diction of the internet. Because much of the text LLMs have ingested comes from sources such as Wikipedia and Reddit, this diction is a hybrid of American online conversational and American pseudo-encyclopaedic. For example, to prompt an “LLM-agent” to perform a set of iterative steps for a finite sequence, the user prompts it with the phrase “continue until you are 100% clear what needs to be done” (https://logankilpatrick.medium.com/what-are-gpt-agents-a-deep-dive-into-the-ai-interface-of-the-future-3c376dcb0824). This pseudo-precise language is intelligible to GPT because it is more familiar with the commonest online usages of “100%” and “needs” than it is with the concepts of percentage or necessity.

19. A recent study found that the most effective prompts were produced by giving the LLM an initial prompt in natural language and then asking it to “generate a new instruction that achieves a higher accuracy”. The researchers call this prompt, which asks the model to prompt itself towards optimisation, a meta-prompt. The AI tended to prompt itself toward greater accuracy with phrases like “Take a deep breath and work step by step” (https://arxiv.org/pdf/2309.03409.pdf). This affective/qualitative imperative appears to have a highly specific mathematical effect when the latent statistical object it represents conditions the billions of statistical operations the LLM performs to produce a text output. The only sensible reason for a massive computational model that can only be prompted towards optimisation with an idiomatic phrase, one that had to be discovered through intensive research, is to serve identification with the model. There can be no appeal here to the greater convenience of using natural language if the supposed natural language requires such efforts to discover. The researchers also found that “Let’s solve the problem!” tended to be more successful than “Solve the problem.”, and that the sentence “I’m always down for solving a math word problem together.” improved performance on certain tasks.
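The meta-prompting loop described in this note can be sketched roughly as follows. This is a minimal sketch under stated assumptions: `propose` and `score` are hypothetical stand-ins for, respectively, a call to an LLM and a measurement of accuracy on a set of test problems, and the toy versions below cycle through fixed strings and return arbitrary placeholder scores rather than anything measured in the study. The function names are illustrative, not the researchers’ code.

```python
from typing import Callable, List, Tuple

History = List[Tuple[str, float]]


def build_meta_prompt(history: History) -> str:
    """Assemble a meta-prompt: earlier instructions with their scores, then a request for a better one."""
    lines = ["Here are some previous instructions and their accuracies:"]
    # Listed from lowest to highest score, so the best instruction so far comes last.
    for instruction, accuracy in sorted(history, key=lambda pair: pair[1]):
        lines.append(f"text: {instruction}")
        lines.append(f"score: {accuracy:.2f}")
    lines.append("Generate a new instruction that achieves a higher accuracy.")
    return "\n".join(lines)


def optimise_prompt(
    seed: str,
    propose: Callable[[str], str],
    score: Callable[[str], float],
    steps: int = 5,
) -> Tuple[str, float]:
    """Repeatedly ask for a better instruction and return the best-scoring one seen."""
    history: History = [(seed, score(seed))]
    for _ in range(steps):
        candidate = propose(build_meta_prompt(history))
        history.append((candidate, score(candidate)))
    return max(history, key=lambda pair: pair[1])


# Hypothetical toy stand-ins so the loop runs without a model.
TOY_CANDIDATES = [
    "Let's solve the problem!",
    "Take a deep breath and work step by step.",
]


def toy_propose(meta_prompt: str) -> str:
    # Cycles through a fixed list instead of querying an LLM.
    n_scored = meta_prompt.count("score:")
    return TOY_CANDIDATES[(n_scored - 1) % len(TOY_CANDIDATES)]


def toy_score(instruction: str) -> float:
    # Arbitrary placeholder scores, not figures reported in the study.
    placeholder = {
        "Let's solve the problem!": 0.6,
        "Take a deep breath and work step by step.": 0.8,
    }
    return placeholder.get(instruction, 0.5)


print(optimise_prompt("Solve the problem.", toy_propose, toy_score, steps=3))
# prints ('Take a deep breath and work step by step.', 0.8)
```

The point of the sketch is structural: the model is only ever asked for strings, and which string “wins” is decided entirely by the number the scoring step attaches to it.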

20. Ibid., Groys.

The large language model mathematises language and subjects it to a mathematical operation; it is we who are required to interpret the functioning of its equation in order to produce a string of words that will produce, more or less, the result we intend. The interpretive effort and the control of the output are squarely in the hands of the human being. One could say that AI in this way is a spectacular failure as a tool, or that it is a successful tool only in the sense meant by Sohn-Rethel in describing the ever-broken machines of Naples, which always offer the human operator the opportunity to fix the wayward machine and thereby to bring to bear the divine spark of human creativity and assert human primacy:

“What is conceived as technical is that which really begins where man makes use of his veto against the closed and hostile automatism of machines and plunges himself into their world. And when he does, he proves to be leaps and bounds ahead of technical laws. For he does not take control of the machines by studying the manuals and learning how to use them, but by discovering his own body inside the machine. To begin with, he has destroyed the misanthropic magic of intact mechanical functions, but he then installs himself in the unmasked monster and its artless soul and enjoys this literal incorporation: ownership which gives him limitless power, the power of utopian existential omnipotence.”

21. For instance, experimentation shows that asking the LLM to solve for the next word in the sentence “The keys to the cabinet…” will yield a different result to asking it the question “Here is a sentence: The keys to the cabinet... What word is most likely to come next?” (https://arxiv.org/pdf/2305.13264.pdf). The flattened statistical topography of language in the LLM is incompatible with interpretation.

22. Davidson, Quine, Psychoanalysis.

23. The LLM requires us to learn a new language through a process that is (only) a simulacrum of learning our mother tongue. To have any control of this unwieldy and imprecise tool, we must utter the right words at the right time. But it is this imprecision that we have coded into it, because it absolves us of the struggle to produce the artwork, or whatever we want to name the germ-cell of human intellectuo-desirous product that we can figure as a discrete and immortal representative of the organism. We can say that the word processor reproduces human language with much greater precision than anything that can be figured as AI. But it does so only in keeping with our desire, or at least only in keeping with our desire as it is made to be expressed via the narrow defiles of the signifier.

24. Alemohammad, S., Casco-Rodriguez, J., Luzi, L., Humayun, A. I., Babaei, H., LeJeune, D., & Baraniuk, R. G. (2023). "Self-consuming generative models go mad". arXiv preprint arXiv:2307.01850.

25. Something like what Stephen Wolfram calls ‘interconcept space’ (https://writings.stephenwolfram.com/2023/07/generative-ai-space-and-the-mental-imagery-of-alien-minds/).

26. Josh Dzieza, "AI Is a Lot of Work", The Verge, 20 June 2023.

27. Carl Franzen, "The AI feedback loop: Researchers warn of ‘model collapse’ as AI trains on AI-generated content", VentureBeat, 12 June 2023.

28. Chen, L., Zaharia, M., & Zou, J. (2023). "How is ChatGPT's behavior changing over time?" arXiv preprint arXiv:2307.09009. https://arxiv.org/abs/2307.09009

29. Shumailov, I., Shumaylov, Z., Zhao, Y., Gal, Y., Papernot, N., & Anderson, R. (2023). "Model Dementia: Generated Data Makes Models Forget". arXiv preprint arXiv:2305.17493. https://arxiv.org/abs/2305.17493v2

One may ask how this differs from the structuralist account of linguistics. The difference is between natural language, in which each signifier acquires meaning in its dialectical and dynamic relation to the entire network of signifiers, and model-language, in which each word-token relates in a hierarchical way, by a purely numerical vector, to each of the other word-tokens in the network. There is no numerical or hierarchical mathematisation of the network of signifiers in the structuralist conception, even though there may be appeals to mathematics as a discourse related to the natural languages in some less easily foreclosed-upon way.

30. The design language of interior architecture may have been one of the first to enter the inhuman ouroboros, via the precocious algorithmisation of parametric design, which proceeded on a grand scale even before the widespread computerisation of the field. The result has been the degeneration of interior aesthetics to a homogenised and humdrum form, well described as ‘AirSpace’ by Kyle Chayka in this 2016 essay: https://www.theverge.com/2016/8/3/12325104/airbnb-aesthetic-global-minimalism-startup-gentrification.

31. We have GPT pick-up lines, GPT grant application, GPT letter to mum, GPT assignment, GPT essay, GPT essay comments, GPT artwork, GPT criticism: every task that can be accomplished by an LLM precisely because it isn’t required to bear the creative marks of a desiring and embodied human being experiencing the world. That is, we must accept that the condition for something like ChatGPT to ‘pass the Turing test’ is that for most of the day human beings are not required to produce human work. This tendency is then amplified by its automation and, by sheer volume, comes to dominate production; and we, having to prompt the algorithm to produce this material and having in every instance to decode and make use of algorithm-generated language, begin to speak like the algorithm ourselves.

32. Wherever an unnecessary writing task is being performed by AI and an unnecessary piece of writing is being read by AI, we can see quite clearly that human beings respond to ‘prompts’ in much the same algorithmic, non-interpretive way that AI responds to prompts. The numerical KPI report, the numerically scored grant application and the Likert-scale questionnaire also demonstrate the mathematisation of this process. We imagine we would all be happier if AI wrote the forms, filled out the forms, and read and scored the forms: insurance claims, job applications, grant applications, university marking, workplace congratulations cards.

33. Karim Lakhani, "AI Won’t Replace Humans — But Humans With AI Will Replace Humans Without AI", Harvard Business Review, 4 August 2023.

34. What Roko (of Roko’s Basilisk) calls “axiological replacement”, that is, the risk that AI and robotics systems render all human labour obsolete, not because automated systems produce ‘superior’ products in any anthropocentric normative sense, but because they are better suited to the imperatives of capital. OpenAI’s CEO Sam Altman has described this outcome in some interviews and downplayed it in others (https://www.theatlantic.com/magazine/archive/2023/09/sam-altman-openai-chatgpt-gpt-4/674764/).

Author/s: Sam Lieblich

Sam Lieblich. 2023. “The Inhuman Ouroboros.” Art and Australia 58, no.2 https://artandaustralia.com/58_2/p162/the-inhuman-ouroboros

Art + Australia Editor-in-Chief: Su Baker

Contact: info@artandaustralia.com

Art + Australia was established in 1963 by Sam Ure-Smith and in 2015 was donated to the Victorian College of the Arts at the University of Melbourne by then publisher and editor Eleonora Triguboff as a gift of the ARTAND Foundation. Art + Australia acknowledges the generous support of the Dr Harold Schenberg Bequest and the Centre of Visual Art, University of Melbourne. © 2022 Victorian College of the Arts. The views expressed in Art + Australia are those of the contributing authors and not necessarily those of the editors or publisher. Art + Australia acknowledges that we live and work on the unceded lands of the people of the Kulin nations who have been and remain traditional owners of this land for tens of thousands of years, and acknowledge and pay our respects to their Elders past, present, and emerging. Art + Australia ISSN 1837-2422

Publisher: Victorian College of the Arts

University of Melbourne

Editor at Large: Edward Colless

Managing Editor: Jeremy Eaton

Art + Australia Study Centre Editor: Suzie Fraser

Digital Archive Researcher: Chloe Ho

Business adviser: Debra Allanson

Design Editors: Karen Ann Donnachie and Andy Simionato (Design adviser. John Warwicker)

University of Melbourne ALL RIGHTS RESERVED